With the COP26 conference well underway, the focus of the world media is on how to reduce emissions and reach net zero carbon globally. As highlighted in a recent paper by EMBL (1), the Life Sciences industry has a key role to play in the mission to prevent climate change.

Every day, scientists around the world perform experiments to find the next life-saving drug or develop the next drought-resistant crop. Much of that work involves single-use sterile plastics and materials, which are an obvious target for reducing our impact as an industry on the planet. Most large Life Sciences companies have policies in place to tackle waste and recycle where possible. Bayer AG, for example, has set itself the target of net zero GHG emissions by 2050 (2); Pfizer aims to reduce its waste disposal rate by 15% (3); and Syngenta aims to reduce the carbon intensity of its operations by at least 50% by 2030 (4).

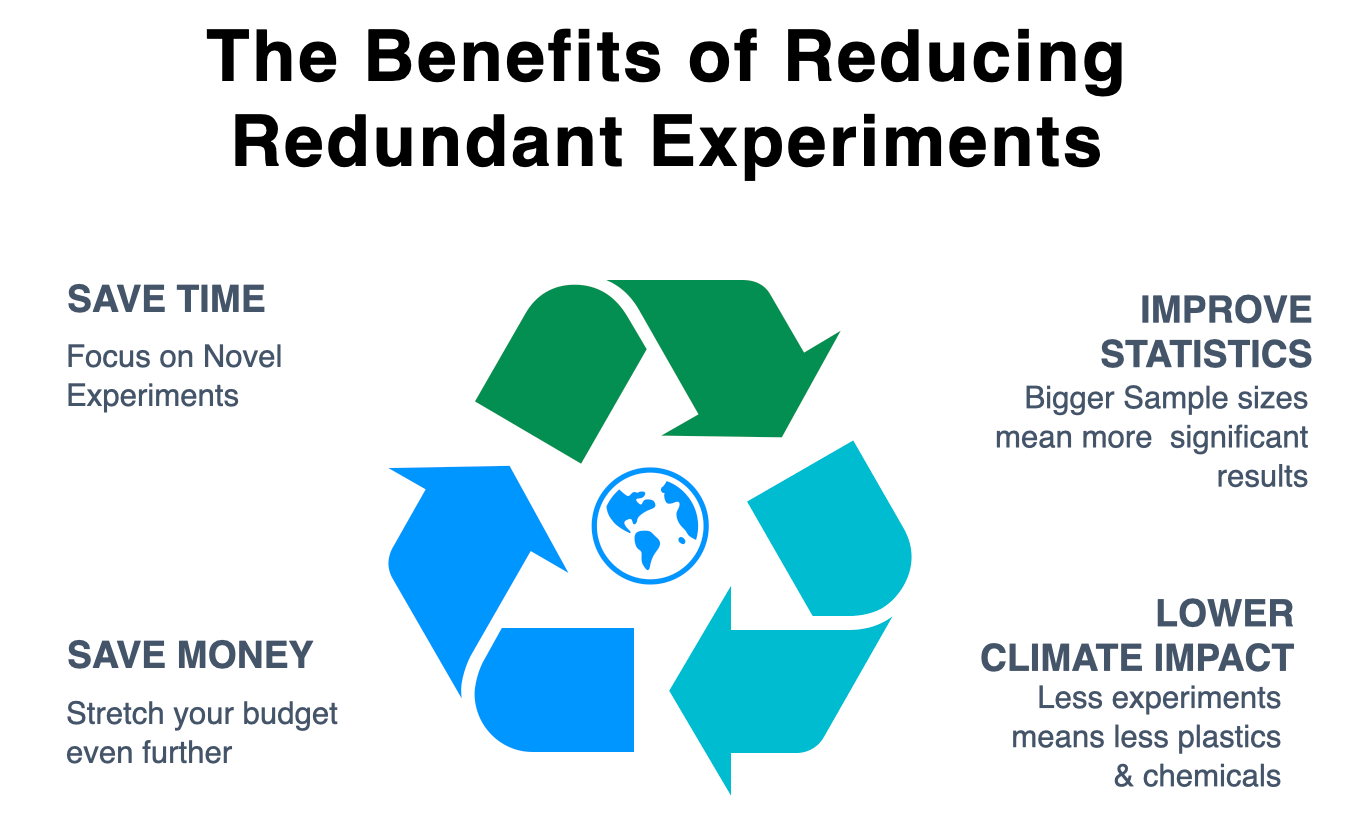

Whilst these goals are crucial, there is one further area that we as an industry need to examine: the number of redundant experiments performed globally every single day. From discussions with our clients, we estimate that up to 50% of experiments could be eliminated by better use and management of the data we as a community already have.

So why don't we make use of the data we already have? Thousands of experimental data sets are produced every year. Some are made public as part of publication and collaboration efforts and some data is held privately by organisations. But why aren’t researchers making use of their internal data pools as well as the vast external public data sources available to them?

The simple answer is that it's not as easy as it should be. It's true that there is a huge amount of public data out there, but there is an almost equal number of combinations of ontologies and vocabularies used to annotate it. This leads to a chicken-and-egg situation where you need to know exactly what search terms to use to discover what data exists.

Just trying to see what samples are available for a simple search like "show me all the samples for non-smoking men over 40, with non-small cell lung carcinoma, overexpression of c-Myc and a P53 mutation" is currently an almost impossible task, due to the number of variable terms and the need to search both metadata and normalised experimental results concurrently.
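To make the problem concrete, here is a minimal Python sketch of what that one search has to do behind the scenes. Everything in it is an assumption made for illustration: the `SYNONYMS` table, the `Sample` structure, the field names and the c-Myc threshold are all hypothetical, and a real system would rely on curated ontologies rather than a hand-written dictionary.

```python
from dataclasses import dataclass, field

# Hypothetical synonym table: maps the many spellings found in real
# annotations onto one canonical term. A real system would use curated
# ontologies instead of a hand-written dictionary.
SYNONYMS = {
    "nsclc": "non-small cell lung carcinoma",
    "non small cell lung carcinoma": "non-small cell lung carcinoma",
    "non-small cell lung cancer": "non-small cell lung carcinoma",
    "m": "male",
    "man": "male",
    "never smoker": "non-smoker",
    "non smoking": "non-smoker",
}


def normalise(term: str) -> str:
    """Return the canonical form of a term, or the cleaned term if unknown."""
    key = term.strip().lower()
    return SYNONYMS.get(key, key)


@dataclass
class Sample:
    sample_id: str
    metadata: dict                                  # free-text annotations
    expression: dict = field(default_factory=dict)  # gene -> normalised level
    mutations: set = field(default_factory=set)     # mutated gene symbols


def matches(sample: Sample, min_myc: float = 2.0) -> bool:
    """Check metadata and experimental results together.

    min_myc is an illustrative cut-off for "overexpression", not a standard.
    """
    meta = {
        k: normalise(v) if isinstance(v, str) else v
        for k, v in sample.metadata.items()
    }
    return (
        meta.get("sex") == "male"
        and meta.get("smoking_status") == "non-smoker"
        and meta.get("age", 0) > 40
        and meta.get("disease") == "non-small cell lung carcinoma"
        and sample.expression.get("MYC", 0.0) >= min_myc
        and "TP53" in sample.mutations
    )


# Three made-up samples, each annotated with a different vocabulary.
samples = [
    Sample("S1", {"sex": "M", "smoking_status": "Never smoker", "age": 57,
                  "disease": "NSCLC"}, {"MYC": 3.1}, {"TP53"}),
    Sample("S2", {"sex": "male", "smoking_status": "non smoking", "age": 35,
                  "disease": "NSCLC"}, {"MYC": 4.0}, {"TP53"}),
    Sample("S3", {"sex": "Man", "smoking_status": "Non-smoker", "age": 62,
                  "disease": "Non-small cell lung cancer"}, {"MYC": 0.8}, {"TP53"}),
]

hits = [s.sample_id for s in samples if matches(s)]
print(hits)
```

Even in this toy case, the query only works because every spelling of every term was anticipated in advance. Scale that up to thousands of data sets annotated by different groups and the size of the curation problem becomes clear.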

Enforcing data models and controlled vocabularies on historic data sets is such a monumental task that it's unlikely ever to be achievable without some major steps forward in AI-powered curation.

So maybe reducing experimental redundancy using public data alone is not easy right now, but at least we are able to use our own organization's internal data pools, right?

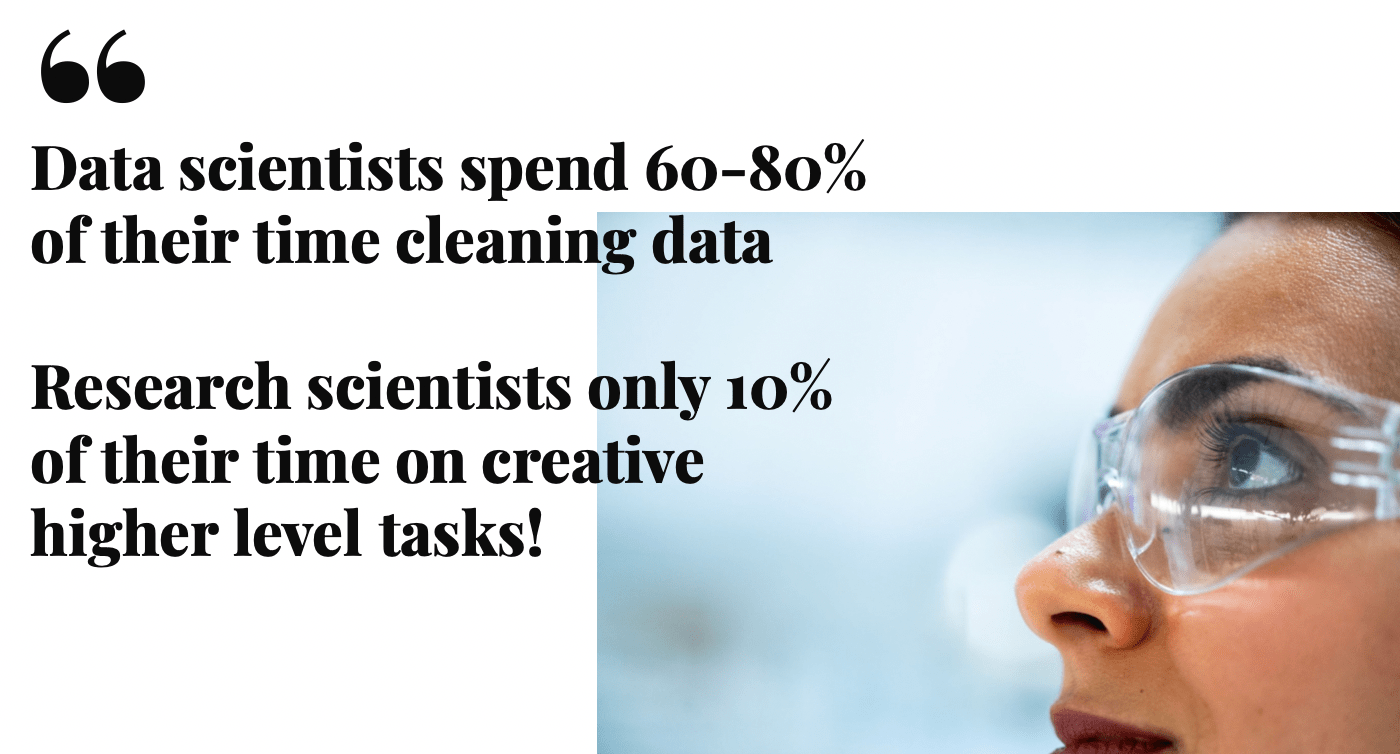

Unfortunately not. A recent presentation from Merck stated that data scientists spend up to 80% of their time cleaning data. Most scientists we speak with don't even know what potentially useful data the lab next door is producing. They're confused about where to start looking for data across LIMS, ELNs, cloud storage, equipment pipelines and external collaborator FTPs.

So what's the solution? How do we stop data scientists and bioinformaticians having to spend their time manually correcting and curating data sample by sample? How do we empower researchers to reduce their impact on the climate by including historic data in their experimental planning?

The simple answer is good data management solutions. Data is precious and valuable. Repeating unnecessary experiments not only impacts the climate but also slows down the pace of innovation - ultimately costing lives and adding more degrees to the ever-increasing global temperature.

Many organizations have taken on the monumental task of building their own solutions, whilst commercial organizations have provided off-the-shelf options to meet the ever-growing need. [What makes a good data management solution? Watch this space for our upcoming article!]

To reduce the number of experiments and ultimately lower the climate impact of the crucial work the Life Sciences industry does, we need more organizations to take data management seriously. We as a community need to insist on the reuse of historical and public data as part of the experimental planning process. We need to help our peers see that the benefits of reducing redundancy go beyond just saving money and have real-world impact too. We need to see companies add reducing experimental redundancy to their climate change and sustainability policies.

Looking after our planet is more than reusing your cup at Costa. It's about making best use of all the resources that have gone before.

So, when you're planning your next experimental series, ask yourself: how can I make sure I'm not repeating something someone else has already done?

If you can't get that information in less than a minute, then drop us a line and let us show you how easy it could be for you to do your bit in the fight against climate change.

- (1) https://www.embl.org/news/lab-matters/life-sciences-have-key-role-in-developing-climate-change-solutions/

- (2) https://www.bayer.com/en/sustainability/climate-protection

- (3) https://www.pfizer.com/purpose/workplace-responsibility/green-journey/climate-change

- (4) https://www.syngenta.com/en/sustainability/climate-change

Genestack aims to advance life-changing research by enabling faster life science breakthroughs. Innovating at pace requires a solid foundation of well-managed and curated data. Genestack provides scalable Life Sciences data solutions that enable fast viewing and extraction of all the relevant experimental data, phenotypic data and metadata to empower your research.
