Originally published on the Technology Networks website: Part One | Part Two

In the first of a two-part blog series, we discuss the advantages of a modular approach to bioinformatics with Dr Misha Kapushesky, CEO and Founder of Genestack. Previously a Team Leader at the European Bioinformatics Institute, we also learn about Misha ‘s move from Institute to industry.

Ruairi Mackenzie (RM): When you were a team leader at the European Bioinformatics Institute was there a challenge you encountered that inspired you to set up Genestack?

Misha Kapushesky (MK): Yes. When I came to the Institute in 2001, it was only a few years old and my first role was as a programmer. One of my first tasks was to take a set of modules which are what we call data managers and make them available for, in the wider sense, the community so that they could run simple analyses themselves. My second task, done in parallel, was to organize public data so that people could get access to the data so that they could do the analyses. And a major challenge turned out to be to join those two things. On the one hand, we had tools to analyze data, on the other hand, we had the data itself which was an archive of essentially zip files. And to join the two of them, people would download the zip files and then re-upload them to those tools. When I became the team leader, one of the first things that we set up was a mechanism that would allow them to interrogate the data that’s sitting in the archives without having to push it through this analytical process; it was a code called Expression Atlas. This tool was really a major success and that was one of the first tools where a large public data collection became not just an archive of files to download but really something that you could interrogate, you know – ask “When and where is this gene active?” “Under what conditions?” and so on.

The challenge that arose there was “How do I view my own data in this context?” Pharma companies started asking us whether we could make this tool, this environment, available to analyze locally. We made that possible and we were distributing the tool together with the data and providing some support. Pharma companies paid us a bit to do this at EBI.

And I realized that what would be great is if I could make tools like this easy to use. That the infrastructure for putting data together with the right analytical tools and building nice interactive data mining interfaces was missing. And we were at the best place in the world for doing it.

So that was the sort of challenges that led me to start up this company, because ultimately the job of a SaaS provider is not to develop replicable infrastructures; his job is really to be optimal at serving data up. But what I noticed is that as data volumes in the world increase and the costs of data production are dropping, every pharma company, every life science research organization, every biotech, every consumer goods company, every medical institution, will have the same challenges.

It took us three years to build this first atlas, and I wanted to have an infrastructure where I could do it in a demo session, you know, in 30 minutes. Just grab these modules, pull them together, and tada, you have your own expression atlas. That was the impetus behind it.

RM: You’ve suggested that a modular approach is the answer to the data woes of many scientists – how can this approach help?

MK: Having modules is critical. It’s one of those things that I think by now pretty much everybody in the industry recognizes, and the reason why having a system that’s composed of relatively independent and replaceable modules gives you flexibility, gives you longevity and gives you control over the information of the system.

If you look at what happens in different R&D organizations, who are at the cutting edge of science and tech and the way that they do things, the data mining processes tend to be dated and complex, and so they have, up until now, had essentially two options.

One option is to build everything in-house. Maybe outsourcing some key elements, but let’s build our own data management infrastructure. However, by the time it’s done the infrastructure won’t consider whatever data type or instrument has come out and we have to do it again. And you don’t have to look far to see examples of this; really good succinct presentations were given on this as recently as last month at Bio-IT World Conference 2018.

So, the second option is: you bring in a service provider who creates an infrastructure for you, and it’s good because you can get going quickly and it’s also very good for the service provider because you’re locked in. You’ve got pay for it as moving data around is difficult and expensive.

As a result, what people are really after now is a combination of these. This is really what our option gives you. What we’re saying is that by providing you with a set of modules, you can pick and choose, and you can build for yourself an Life Science Data ecosystem.

You can create a very flexible and optimizable data architecture. So, we take on the most basic, common, underlying layer and that module of ours is compact. These are common user paths which are fundamental for all kinds of data management, not even specific to biological.

And then we develop individual modules for different paths and these modules work independently. In fact, if you’re looking at genomic data, if you see there is a better module than Genestack you can grab it. And this means that you can use your own analysis pipeline; you can use open source packages; you can use commercial analytical providers for pipelines like Spotfire. We’re quite agnostic, we provide several modules building blocks that you can use to build up a multi-layered system.

We’ve got this ability to integrate with other things, but the key is that it’s really easy for us to introduce modules that capture new emerging datatypes. If there’s one constant about the multi-omics world it’s that it’s always changing. Every two or three years, there’s a new advance in instrumentation. We had microarrays, then we went to next-generation sequencing, and now we’re in the single cell analysis world. So, it’s moving, and every two or three years we have a new thing. You have to develop new modules each time.

We have a system that can evolve with the industry and it provides a flexible data architecture and it means the organization, the pharma company in this case, are in control as to which bits we have to offer they can use and which bits they can bring in from the rest of the industry. So that I think is an important development, to offer this third way between having a customized vendor solution or building it all in-house.

In the second of a two-part blog series, we talk with Genestack CEO Misha Kapushesky on the developments in Bioinformatics that he’s most excited about, and the latest additions to Genestack’s bioinformatics platform.

Ruairi Mackenzie (RM): You were recently presenting at Bio-IT World 2018. What are the developments within the bioinformatics industry that you are most excited about?

Misha Kapushesky (MK): I’d say that there are three developments that I’m really excited about. One of them is the elephant in the room which you cannot really ignore; the possibilities that are opened up by advances in machine learning and associated tools. It’s really exciting stuff, and I think that you can see that in the collaborations that are happening between pharma companies and young start-ups like ours and the resources these companies put into developing means to bring diverse data together to make better predictions.

At first, machine learning was used in areas where you had imaging data, which is rich in volume and sits well into the sort of classic machine learning formulation or categorization. But now we’re talking about conversational interfaces, we’re talking about large-scale networks of data and extracting knowledge out of them. For this all to work you need to have a significant human input into having clean and organized data and metadata; it’s not just about having more data but good quality data. This is a pretty important development; I think it’s an interesting evolution where some of the routine things that humans have been doing will be taken up by algorithms, but this will free up humans to do exciting intellectual things. So that’s an exciting development.

Another development that I’m excited about is that up until recently, multi-omics and generally Life Science Data really belonged purely to the research domain. And now it’s being recognized that the clinical domain is interested in Life Science Data. So, clinical trials now collect Life Science Data. When you talk about a new drug’s development, one starts to look at patient omics profiles and again you’ll see what used to be kind of translational medicine now edging even further into the clinical part of the drug development cycle.

The third thing is that it’s exciting to see that the precision of what we are measuring about our genomes is increasing. You look at the origins of genomics and genetics; we had Mendel, who would classify peas as either wrinkled or smooth – that’s the extent of what we knew about. Then we grasped the existence of genes and were able to understand that there is such a thing as mRNA which corresponds to gene activity and we were able to put it on a gel and get a readout: “bright versus not very bright”. So that was already something. Then microarrays came along, and we were able to measure this on a log scale, to say with clarity that “this is four-fold brighter, so we have four times more expression”. Then, we invented sequencing. But all of this was, you know, fairly large-scale measurement. Now we’re talking about single cell measurement, which was an exciting newcomer, let’s say a year or two ago. Now we’re getting routine data sets of millions of cells, where you can get an idea of what’s going on in the genetics at the level of an individual cell. And I think that the next step is to analyze a single cell without taking that out of the body and over time. We will have a complete picture of what each cell in your body is doing over your lifetime. That’s kind of the holy grail goal. We’re not there yet, but that’s the goal.

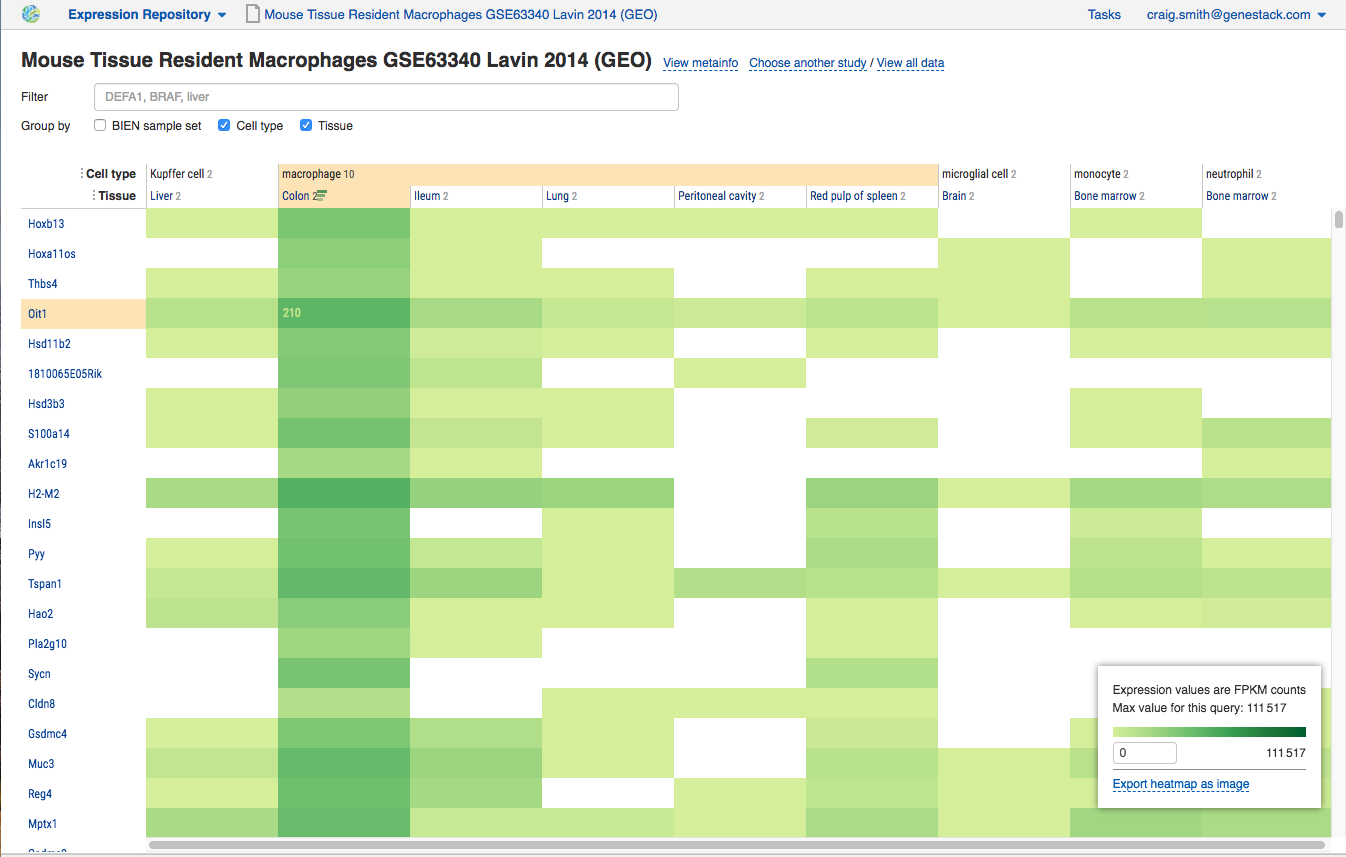

RM: At Bio IT World, you were presenting your Expression Data Miner module, a transcriptomics tool – why have you decided to focus on this omics with your latest module?

MK: There’s several reasons. It’s less to do with transcriptomic data somehow being more difficult to handle than other data. It’s to do rather with the relationship between the biologist, the researcher, the bioinformatician and the data manager. Currently, to some degree all Life Science Data is siloed, pretty difficult to find, pretty difficult to organize.

One of the first things that we have developed is a single place where all the, in this case, transcriptLife Science Datasets are searchable in one place from across all public sources. If you have your own collection of this data type you can also bring it in. This is just the first module, and the following modules that we have got underway we will be tackling additional Life Science Data types.

In drug discovery, one of the first things you do is identify and validate targets. And the ability to understand gene function using that transcriptLife Science Data is going to be one of the first data types that you look at. You want to know if your target gene is expressed in a particular tissue and only in that tissue; in other words, if you want to target it in the lungs, you don’t want also for this gene to be active in the brain and the heart tissue and elsewhere, so you can minimize off target effects. You also want to make sure that the gene is only active during the disease condition as opposed to all the time. Having your information well-organized and easy to find gives you the ability at certain stages to be quite confident about whether to pursue the target or not, and it fulfills an important chunk of requisite information about deciding on a target.

In this decision-making process, there is a big role for a bioinformatician and a data manager. We can save these people a huge amount of time and a huge amount of money usually spent aggregating data collection and pushing it through an analytical pipeline, essentially to answer more or less the same questions that arise time and again in these early stages of drug discovery.

The idea for us is to free up the time of a bioinformatician and data manager and give the task of identifying and interpreting gene activity directly to the biologist, meaning the bioinformatician and data manager can spend their time on building more complex, interesting analytics than commonly is the case.

I would also add that the technology that is available to us today means that we can make things that are truly interactive, truly real time, really graphically appealing and we can capitalize on the new technology development purely from a user experience point of view, so it’s an exciting time.

Misha Kapushesky was speaking with Ruairi J Mackenzie, Science Writer for Technology Networks

Originally published on the Technology Networks website: Part One | Part Two