Several of us have enjoyed the benefits of a pet during enforced isolation due to the coronavirus pandemic, but how intelligent do you think your pet is? Could they perhaps count the number of items shown to them and tap out that number with their paw? Could they perform feats of arithmetic, such as adding fractions together and converting them into decimals?

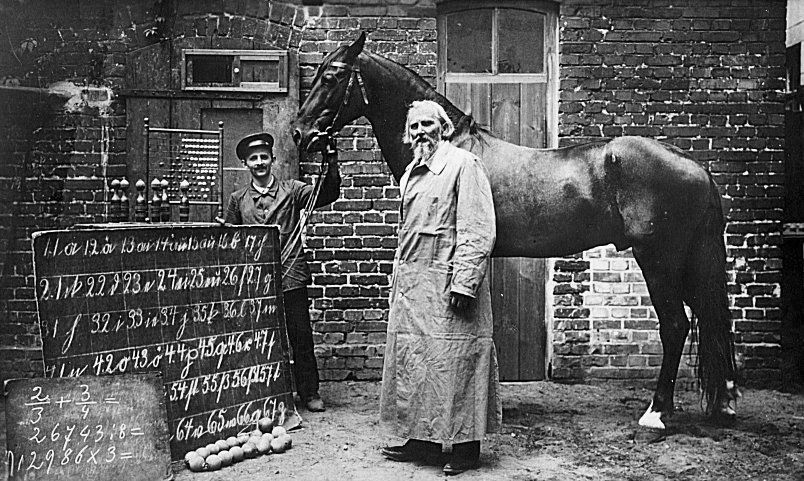

If you’re astonished by the thought then you share that feeling with audiences at the beginning of the 20th century when they were introduced to Clever Hans. Clever Hans was a horse who appeared to be able to answer mathematical and other intellectual challenges, thus giving the impression of human intelligence - so one of the first AIs was actually ‘Animal Intelligence’.

It was eventually discovered that Clever Hans was responding to cues, such as tensing up, that his questioner was giving, rather than actually comprehending german and mathematics himself. To make matters more intriguing, the questioner wasn’t aware of these cues, and would even continue to produce some kind of cue when trying to suppress them.

Machine Learning algorithms display impressions of intelligence in a remarkably similar manner: presented with particular questions they can output answers that seem intelligent. But just as Clever Hans was in fact behaving according to a set of inputs that people weren’t aware of, so ML is also at risk of picking up a correlation between something unexpected in the data and a particular outcome, and discovering the actual deciding factor(s) can be tricky.

Related Article Driving precision medicine and the evolution of clinical data management Pharma is beginning to recognize the value of their existing data assets and using these to empower future research. In this industry article we reflect on this transformation.Ideally you want the inputs that correlate to a particular classification (say) to make scientific sense - number of legs having a correlation to some kind of animal classification for example. But ML algorithms don’t know what makes sense or not, and just as Clever Hans generated outcomes by reading cues from his handler, ML can find correlation with some kinds of input that don’t serve to help when the model is used more generally. In other words, it might be useless once deployed where such spurious inputs aren’t present or don’t correlate in the same way.

So what can we do about it?

The mystery of Clever Hans was solved through careful examination and by controlling the inputs available to him. A similar approach to data is also key for the use of AI/ML that is deployable in the real world.

1. Pass only inputs of interest to your algorithm

It’s tempting to just let ML loose on all the data you can access in the hope of finding a descriptive model, but the simplest way of helping ML generate models that can be applied in the real world is to focus on inputs that are of interest in the real world, i.e. feature selection. For image data that could mean pre-processing data to remove non-subject matter such as date-stamps etc. For Life Science Data that could mean removing factors that you know to be independent of the hypothesis you are testing. Having a system that can export data for factors of interest in a consistent manner across different metadata and data objects is useful.

2. Pass all relevant data to your algorithm

Conversely, it’s important to ensure that you provide all of the relevant data, minimising missing data, for your chosen factors. Errors can occur with omission, or lack of access, so having accessible data and some kind of system that flags up missing data is vital.

3. Make sure your data are accurate

Obviously you want your data to be as accurate as possible, but in this time of metadata-as-data the accuracy and consistency of metadata is just as important. Misspellings or Excel formatting auto-corrections can prevent ML from generating an accurate model, so, like flags for missing data, having validation for accuracy (perhaps against appropriate controlled-vocabularies) is also very useful. Finding inaccurate data is one thing, but additionally having the tools to correct them as easily as possible can be the difference between insight generation or endless data wrangling chores.

4. Make sure your data are comparable

Whether that’s combining data from multiple sources and controlling bias, or just being sure that all your sample metadata are harmonized. Metadata models, together with ontologies/controlled-vocabularies, are key.

There was intelligence in the story of Clever Hans, but the intelligence was behind the cues that were presented to Clever Hans. Intelligent input is equally key to enabling successful use of ML. Making that as easy as possible (through a tool like Genestack ODM) in turn ensures that your intelligence goes on actioning insights, not on mucking out the data stable.

Related:

> Wellcome Sanger Institute adopts Genestack’s Genestack ODM for Human Genetics datasets